There has been a significant amount of angst in the media over the last few days about a bad decision Facebook researchers made in 2012. According to recently published data, 689,003 Facebook users were the subjects of a psychological experiment. They were split into two experimental groups: one group had its News Feed manipulated to show mostly positive posts, and the other was shown mostly negative posts. Both groups were then monitored to see whether these individuals would go on to write positive or negative posts themselves. The researchers found a very slight correlation: the negative-exposure group went on to write more negative posts, and the positive-exposure group more positive ones.

Critics of the study say that Facebook was running psychological research on unsuspecting people, probably giving everyone in the negative group a worse day than they would otherwise have had. Facebook countered that it was simply trying to better understand how its site works, just as any other web property tests various elements of its website in order to improve it.

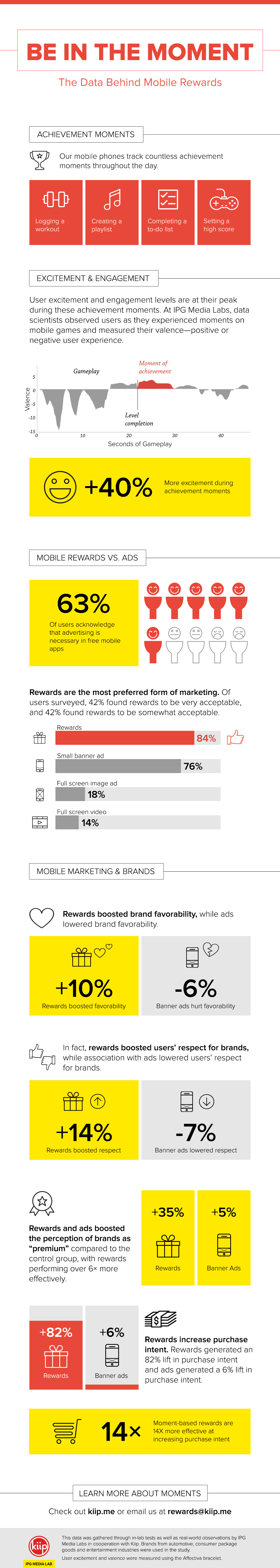

We’re not here to pass judgement on the study itself, but we do want to critique its methodology. Facebook made a few errors here, and they are errors the IPG Media Lab does not make when performing similar research. When the IPG Media Lab conducts this kind of research, we recruit paid participants who have opted in to our study. They do not know what we are studying and have no way of knowing how we are manipulating their experience, but whether that experience is negative or positive, they have explicitly consented to be studied during a set time frame and are compensated for it.

Facebook claims that all users gave their consent simply by signing up for Facebook, but the actual language does not make it obvious that users are signing up for this kind of research. It is just a generic phrase telling users that their data will be used “for internal operations, including troubleshooting, data analysis, testing, research and service improvement.” Nothing in this language implies that Facebook will actively manipulate an individual’s experience; if anything, it implies that Facebook will passively use the data it collects.

Worse, according to Forbes, the research opt-in language in Facebook’s terms of service did not yet exist when the experiment in question took place.